Army set to issue new policy guidance on use of large language models

The Army is close to issuing a new directive to help guide the department’s use of generative artificial intelligence and, specifically, large language models, according to its chief information officer.

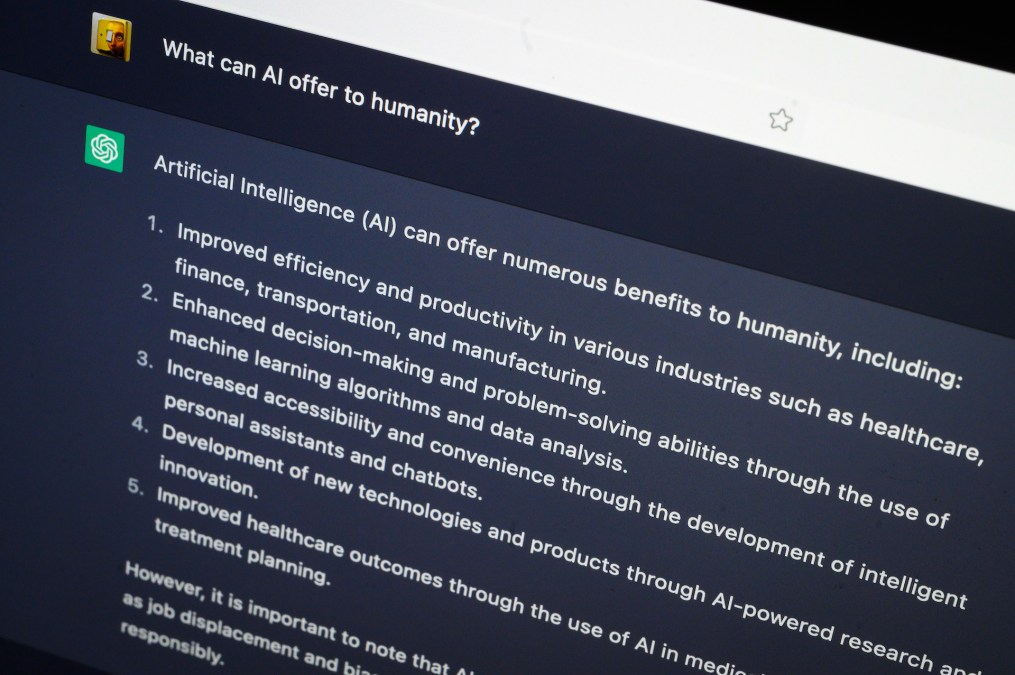

LLMs, which can generate content — such as text, audio, code, images, videos and other types of media — based on prompts and data they are trained on, have exploded in popularity with the emergence of ChatGPT and other commercially available tools. Pentagon officials aim to leverage generative AI capabilities, but they want solutions that won’t expose sensitive information to unauthorized individuals. They also want technology that can be tailored to meet DOD’s unique needs.

“Definitely looking at pushing out guidance here, hopefully in the next two weeks, right — no promises right now, because it’s still in some staffing — on genAI and large language models,” Army CIO Leo Garciga said Thursday during a webinar hosted by AFCEA NOVA. “We continue to see the demand signal. And though [there is] lots of immaturity in this space, we’re working through what that looks like from a cyber perspective and how we’re going to treat that. So we’re gonna have some initial policy coming out.”

The CIO’s team has been consulting with the Office of the Assistant Secretary of the Army for Acquisition, Logistics and Technology as it fleshes things out.

“We’ve been working with our partners at ASAALT to kind of give some shaping out to industry and to the force so we can get a little bit more proactive in our experimentation and operationalization of that technology,” Garciga said.

Pentagon officials see generative AI as a tool that could be used across the department, from making back-office functions more efficient to aiding warfighters on the battlefield.

However, there are security concerns that need to be addressed.

“LLMs are awesome. They’re huge productivity boosters. They allow us to get a lot more work done. But they are very new technology … In my view, we are definitely in an AI bubble. Right? When you look kind of across industry, everybody’s competing to try to get their best, you know, LLM out there as quickly as possible. And by doing that, we have some gaps. I mean, we just do. And so it’s very important that we not take an LLM that is out on, you know, the web that I can just go and log into and access and put our data into it to try to get responses,” said Jennifer Swanson, deputy assistant secretary of the Army for data, engineering and software.

Doing so risks having the Army’s sensitive data bleed into the public domain via the internet and training models that adversaries could access, she noted.

“That’s really not OK, it’s very dangerous. And so we are looking at what we can do internally, within, you know, [Impact Level] 5, IL6, whatever boundaries, different boundaries that we have out there … And we’re moving as quickly as we can. And we definitely want tools within that space that our folks can use and our developers can use, but you know it’s not going to be the tools that are out there on the internet,” she added.

The forthcoming policy guidance is expected to address security concerns.

“I think folks are really concerned out in industry. And we’re getting a lot of feedback on, you know, just asking us what we think the guidance is going to look like. But we’re going to focus on putting some guardrails and some left and right limits … We’ve really focused on letting folks know, hey, this space is open for use. If you do have access to an LLM, right, make sure you’re putting the right data in there, make sure you understand what the left and right limits [are] … Don’t put, you know, an [operation order] inside public ChatGPT — probably not a good idea, right? Believe it or not, things like that are probably happening,” Garciga said.

“I think we really want to focus on making sure that it’s a data-to-capability piece, and then add some depth for our vendors where we start putting a little bit of a box around, [if] I’m going to build a model for the U.S. government, what does it mean for me to build it on prem in my corporate headquarters? What does that look like? … What is that relationship? Because that’s going to drive contracts and a bunch of other things. We’re going to start the initial wrapping of what that’s going to look like in our initial guidance memo so we can start having a more robust conversation in this space. But it’s really going to be focused around mostly data protection … and what we think the guardrails [need] to be and what our interaction between the government and industry is going to look like in this space,” he added.