Army says it’s using AI to help produce doctrine, but acknowledges the technology’s flaws

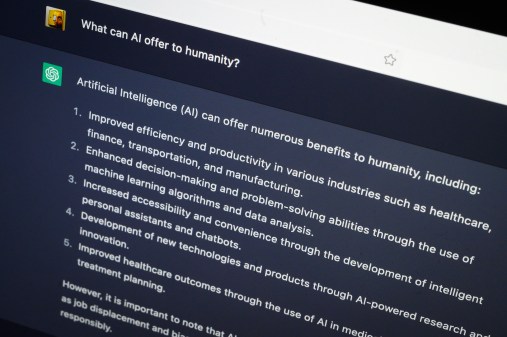

The Army is incorporating artificial intelligence tools to help write doctrine, the service said Wednesday.

The Combined Arms Doctrine Directorate, the Army’s hub for producing foundational publications meant to guide how soldiers operate, is training doctrine writers to “apply approved AI tools to their work immediately” — to include idea generation, according to a service press release.

The military has been aggressively applying large language models across virtually all parts of the force as Pentagon officials tout the emerging tech as a boon to operations. Experts have warned extensively of AI’s risks to society and in military use, including its tendency to fabricate, or “hallucinate,” information, which risks reducing public trust in institutions.

In the release, the Army acknowledged AI’s “critical flaws in a field where accuracy is paramount,” specifically its propensity to invent facts or “confuse source materials,” but said that it is improving over time and would not be used as a “crutch” for doctrine writers.

In one case, the Army noted, an AI tool used an outdated manual when writers were developing a doctrine test, “an error that was only caught because the user creating the test was an expert” on the topic.

“You treat it like a resourceful and motivated young officer who might not know all the information, but they can certainly assist you in cutting some corners and being a little more efficient,” said Lt. Col. Scott McMahan, a military doctrine writer, according to the release.

The service said changes have been minimal so far, but writers have saved time by using the technology to search “hundreds of texts for historical vignettes that illustrate a complex doctrinal point.”

“The large language model tools under development now have access to the databases we needed access to in the past,” said CADD Director Richard Creed, Jr. “Access to the data is the foundational measure of whether the tools are useful to us.”

It was unclear which data the unnamed AI capabilities were pulling from. Information security has been a top concern among experts in this field. DefenseScoop recently reported that the military’s top EOD technology authority warned its bomb technicians against uploading highly guarded technical material into AI models, including Pentagon platforms.

The artificial intelligence tools are also intended to assist with grammar, readability and idea generation for doctrine.

“We were looking for some more meat for an idea,” McMahan said. “We were able to feed this tool some initial thoughts, and of the three paragraphs it spit out, one sentence was used, but that was a really powerful and useful sentence.”

Officials developed a “four-pronged strategy” to train Army doctrine writers on AI capabilities, according to the service. That plan includes embedding a “master gunner” — someone who is already trained in using large language models — to assist writers in using the tech.

“We made it perfectly clear that AI tools were not intended to be a crutch for not doing the work we expect from our people,” Creed said. “Humans will review every line of what an LLM produces for accuracy. To make sure that happens one must make sure your people know their business.”