Intelligence community working with private sector to understand impacts of generative AI

As the possibilities and potential threats of generative artificial intelligence grow, the United States intelligence community is looking to engage with the private sector to help it assess the technology, according to U.S. Director of National Intelligence Avril Haines.

“We’ve been writing some analysis to try to look at what the potential impact is on society in a variety of different realms, and obviously we see some impact in intelligence activities,” Haines told the Senate Armed Services Committee on Thursday. “What we also recognize is that we do not yet have our hands around what the potential is.”

Generative AI is an emerging subfield of artificial intelligence that uses models such as large language models to turn human prompts into AI-generated audio, code, text, images, videos and other types of media. Platforms that leverage the technology, such as ChatGPT, BingAI and DALL-E 2, have gone viral in recent months.

While leaders within the U.S. government have acknowledged the technology’s usefulness to assist workers, some have expressed concern over how generative AI will affect daily life and have called for better collaboration with industry to help understand its capabilities.

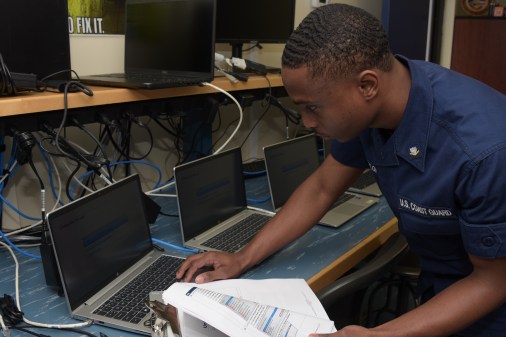

Within the intelligence community, Haines said, many organizations have assembled task forces made up of artificial intelligence experts to understand the technology’s impact.

“We have been trying to facilitate groups of experts in the IC to essentially connect with those in the private sector who are on the cutting edge of some of these developments, so that we can make sure that we understand what they see as potential uses and developments in this area,” she added.

Haines’ comments came on the same day as a meeting between Vice President Kamala Harris and the CEOs of Alphabet, Anthropic, Microsoft and OpenAI, four pioneers of generative AI. According to a White House fact sheet on new administration actions to promote responsible AI innovation, the meeting was intended to underscore the companies’ responsibility and to “emphasize the importance of driving responsible, trustworthy, and ethical innovation with safeguards that mitigate risks and potential harms to individuals and our society.”

In addition, Anthropic, Google, Hugging Face, Microsoft, NVIDIA, OpenAI and Stability AI have announced that they will open their large language models to red-teaming at the upcoming DEF CON hacking conference in Las Vegas as part of the White House initiative. The event will be the first public assessment of large language models, a senior administration official told reporters on condition of anonymity.

“Of course, what we’re drawing on here, red-teaming has been really helpful and very successful in cybersecurity for identifying vulnerabilities,” the official said. “That’s what we’re now working to adapt for large language models.”

Haines is not the first U.S. government leader to call for closer collaboration with industry on generative AI. On Tuesday, for example, Defense Information Systems Agency Director Lt. Gen. Robert Skinner pleaded with industry to help the agency understand how it could leverage the technology better than adversaries.

“Generative AI, I would offer, is probably one of the most disruptive technologies and initiatives in a very long, long time,” Skinner said during a keynote speech at AFCEA’s TechNet Cyber conference in Baltimore. “Those who harness that [and] that can understand how to best leverage it … are going to be the ones that have the high ground.”

As the technology continues to evolve rapidly, some industry experts have advocated a temporary pause in generative AI development so that vendors and regulators have time to create standards for its use. But officials at the Pentagon have argued against such a moratorium.

“Some have argued for a six-month pause — which I personally don’t advocate towards, because if we stop, guess who’s not going to stop: potential adversaries overseas. We’ve got to keep moving,” Department of Defense Chief Information Officer John Sherman said Wednesday at the AFCEA TechNet Cyber conference in Baltimore.