NGA launches new training to help personnel adopt AI responsibly

Artificial intelligence and machine learning adoption will increasingly disrupt and revolutionize the National Geospatial-Intelligence Agency’s operations, so leaders there are getting serious about helping personnel responsibly navigate the development and use of algorithms, models and associated emerging technologies.

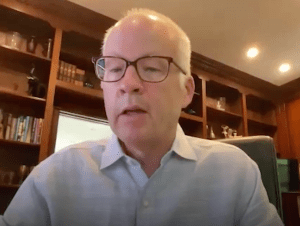

“I think the blessing and curse of AI is that it’s going to think differently than us. It could make us better — but it can also confuse us, and it can also mislead us. So we really need to have ways of translating between the two, or having a lot of understanding about where it’s going to succeed and where it’s going to fail so we know where to look for problems to emerge,” NGA’s first-ever Chief of Responsible Artificial Intelligence Anna Rubinstein recently told DefenseScoop.

In her inaugural year in that nascent position, Rubinstein led the development of a new strategy and an instructional platform to help guide and govern employees’ existing and future AI pursuits. The educational tool is called GEOINT Responsible AI Training, or GREAT.

“So you can be a ‘GREAT’ developer or a ‘GREAT’ user,” Rubinstein said.

During a joint interview, she and NGA’s director of data and digital innovation, Mark Munsell, briefed DefenseScoop on their team’s vision and evolving approach to ensuring that the agency deploys AI in a safe, ethical and trustworthy manner.

Irresponsible AI

Geospatial intelligence, or GEOINT, is the discipline in which imagery and data are captured from satellites, radar, drones and other assets, then analyzed by experts to visually depict and assess physical features and specific geographically referenced activities on Earth.

NGA has historically been known as the United States’ secretive mapping agency.

One of its main missions now (which is closely guarded and not widely publicized) involves managing the entire AI development pipeline for Maven, the military’s prolific computer vision program.

“Prior to this role, I was the director of test and evaluation for Maven. So I got to have a lot of really cool experiences working with different types of AI technologies and applications, and figuring out how to test it at the level of the data models, systems and the human-machine teams. It was just really fun and exciting to take it to the warfighter and see how they are going to use this. We can’t just drop technology in somebody’s lap — you have to make sure the training and the tradecraft is there to support it,” Rubinstein noted.

Although she held that role as a contractor, her Maven expertise now deeply informs her approach to the new, permanent federal position she was tapped to fill.

“I’m trying to leverage all that great experience that I had on Maven to figure out how we can build enterprise capabilities and processes to support NGA — in terms of training people to make sure they understand how to develop and use AI responsibly — to make sure at the program level we can identify best practices and start to distill those into guidelines that programs can use to make sure they can be interoperable and visible to each other, to make sure that we’re informing policy around how to use AI especially in high-risk use cases, and to make sure we’re bringing NGA’s expert judgment on the GEOINT front into that conversation,” Rubinstein explained.

Inside the agency, she currently reports to Mark Munsell, an award-winning software engineer and longtime leader at NGA.

“It’s always been NGA’s responsibility to teach, train and qualify people to do precise geo-coordinate mensuration. So this is a GEOINT tradecraft to derive a precision coordinate with imagery. That has to be practiced in a certain way so that if you do employ a precision-guided munition, you’re doing it correctly,” he told DefenseScoop.

According to Munsell, a variety of timely factors motivated the agency to hire Rubinstein and set up a new team within his directorate that focuses solely on AI assurance and workforce development.

“The White House said we should do it. The Department of Defense said we should do it. So all of the country’s leadership thinks that we should do it. I will say, too, that the recognition of both the power of what we’re seeing in tools today and trying to project the power of those tools in five or 10 years from now, says that we need to be paying attention to this now,” Munsell told DefenseScoop.

Notably, the establishment of NGA’s AI assurance team also comes as the burgeoning field of geoAI — which encompasses methods combining AI and geospatial data and analysis technologies to advance understanding and solutions for complex, spatial problems — rapidly evolves and holds potential for drastic disruption.

“We have really good coders in the United States. They’re developing really great, powerful tools. And at any given time, those tools can be turned against us,” Munsell said.

DefenseScoop asked both him and Rubinstein to help the audience fully visualize what “irresponsible” AI would look like from NGA’s perspective.

Munsell pointed to the 1983 techno-thriller film WarGames.

In the movie, a young hacker accesses a U.S. military supercomputer named WOPR — or War Operation Plan Response — and inadvertently triggers a false alarm that threatens to ignite a nuclear war.

“It’s sort of the earliest mention of artificial intelligence in popular culture, even before Terminator and all that kind of stuff. And of course, WOPR decides it’s time to destroy the world and to launch all the missiles from the United States to Russia. And so it starts this countdown, and they’re trying to stop the computer, and the four-star NORAD general walks out and says, ‘Can’t you just unplug the damn thing?’ And the guy like holds a wire and says, ‘Don’t you think we’ve tried that!’” Munsell said.

In response, Rubinstein also noted that people will often ask her who is serving as NGA’s chief of irresponsible AI, which she called “a snarky way of asking a fair question” about how to achieve and measure responsible AI adoption.

“You’re never going to know everything [with AI], but it’s about making sure you have processes in place to deal with [risk] when it happens, that you have processes for documenting issues, communicating about them and learning from them. And so, I feel like irresponsibility would be not having any of that and just chucking AI over the fence and then when something bad happens, being like ‘Oops, guess we should have [been ready for] that,’” she said.

Munsell added that in his view, “responsible AI is good AI, and it’s war-winning AI.”

“The more that we provide quality feedback to these models, the better they’re going to be. And therefore, they will perform as designed instead of sloppy, or instead of with a bunch of mistakes and with a bunch of wrong information. And all of those things are irresponsible,” he said.

‘Just the beginning’

Almost immediately after Rubinstein joined NGA as responsible AI chief last summer, senior leadership asked her to oversee the production of a plan and training tool to direct the agency’s relevant technology pursuits.

“When one model can be used for 100 different use cases, and one use case could have 100 different models feeding into it, it’s very complicated. So, we laid out a strategy of what are all the different touchpoints to ensure that we’re building AI governance and assurance into every layer,” she said.

The strategy she and her team created is designed around four pillars. Three of those cover AI assurance at scale, program support, and policies around high-risk use cases.

“And the first is people — so that’s GREAT training,” Rubinstein told DefenseScoop.

The ultimate motivation behind the new training “is to really bring it home to AI practitioners about what AI ethics means and looks like in practice,” she added.

The new resources her team is refining aim to distill high-level principles into actionable frameworks for approaching real-world problems across the AI lifecycle.

“It’s easy to say you want AI to be transparent and unbiased, and governable and equitable. But what does that mean? And how do you do that? How do you know when you’ve actually gotten there?” Rubinstein said.

To address the different needs of developers and users, there are two versions of the GREAT training: one for AI developers and another for AI users.

“The lessons take you somewhat linearly through the development process — like how you set requirements, how you think about data, models, systems and deployment. But then the scenario has a capstone that happens at the end, drops you into the middle of a scenario. There’s a problem, you’re on an AI red team, people have come to you to solve this issue. These are the concerns about this model. And there are three rounds, and each round has a plot twist,” Rubinstein explained.

“So it’s, we’re giving students a way to start to think about what that’s going to look like within their organizations and broadly, NGA — and even broader in the geospatial community,” she said.

Multiple partners, including Penn State Applied Research Lab and In-Q-Tel Labs, have supported the making of the training so far.

“We got the GREAT developer course up and running in April, we got the GREAT user course up and running in May. And then beyond that, we will be thinking about how we scale this to everyone else and make sure that we can offer this beyond [our directorate] and beyond NGA,” Rubinstein said.

Her team is also beginning to discuss “what requirements need to look like around who should take it.”

Currently, everyone in NGA’s data and digital innovation directorate is required to complete GREAT. For all other staff, it’s optional.

“The closer they are to being hands-on-keyboard with the AI — either as a producer or consumer — the more we’ll prioritize getting them into classes faster,” Rubinstein noted.

Munsell chimed in: “But the training is just the beginning.”

Moving forward, he and other senior officials intend to formalize this new process into an official certification.

“We want it to mean something when you say you’re a GREAT developer or a GREAT user. And then we want to be able to accredit organizations to maintain their own GEOINT AI training so that we can all be aligned on the standards of our approach to responsible GEOINT AI, but have that more distributed approach to how we offer this,” Rubinstein told DefenseScoop. “Then, beyond that, we want to look at how we can do verification and validation of tools that also support the GEOINT AI analysis mission.”

Updated on June 18, 2024, at 8:05 PM: This story has been updated to reflect a clarification from NGA about how it spells the acronym it uses for its GEOINT Responsible AI Training tool.