How DOD will help agencies comply with the White House’s new rules for AI in national security

Chief Digital and Artificial Intelligence Office technologists who produced the Pentagon’s first “Responsible AI” toolkits are set to help steer multiple activities that will contribute to the early implementation of the new White House memo guiding federal agencies’ adoption of trustworthy algorithms and models for national security purposes, according to defense officials involved.

Acting Deputy CDAO for Policy John Turner briefed DefenseScoop on those plans and more — including near-term implications for the Defense Department’s autonomous weapons-governing rules — in an exclusive interview at the RAI in Defense Forum on Tuesday.

“I actually co-led the meeting this morning with the Office of the Under Secretary of Defense for Policy” about the National Security Memorandum (NSM) on AI issued by President Biden last week, Turner explained.

By directive, that undersecretariat is the department’s interface with the White House National Security Council.

“But given the substance and the content of what the NSM is driving, we are co-leading the coordination of all of the deliverables from the NSM together with [the Office of the Under Secretary of Defense for Policy]. So, we held the kickoff meeting with representatives from across the whole department to level-set on our approach, how we’re going to communicate. And given the 66 different actions that are from the framework, the NSM, and its classified annex, we want to make sure that we’re organized and we have the right people on the right tasks,” Turner told DefenseScoop.

As he highlighted, the new NSM and framework lay out a path ahead and dozens of directions for how the U.S. government should appropriately harness AI-powered technologies and associated models, “especially in the context of national security systems (NSS), while protecting human rights, civil rights, civil liberties, privacy, and safety in AI-enabled national security activities.”

A number of the memo’s provisions are geared toward the DOD and the intelligence community.

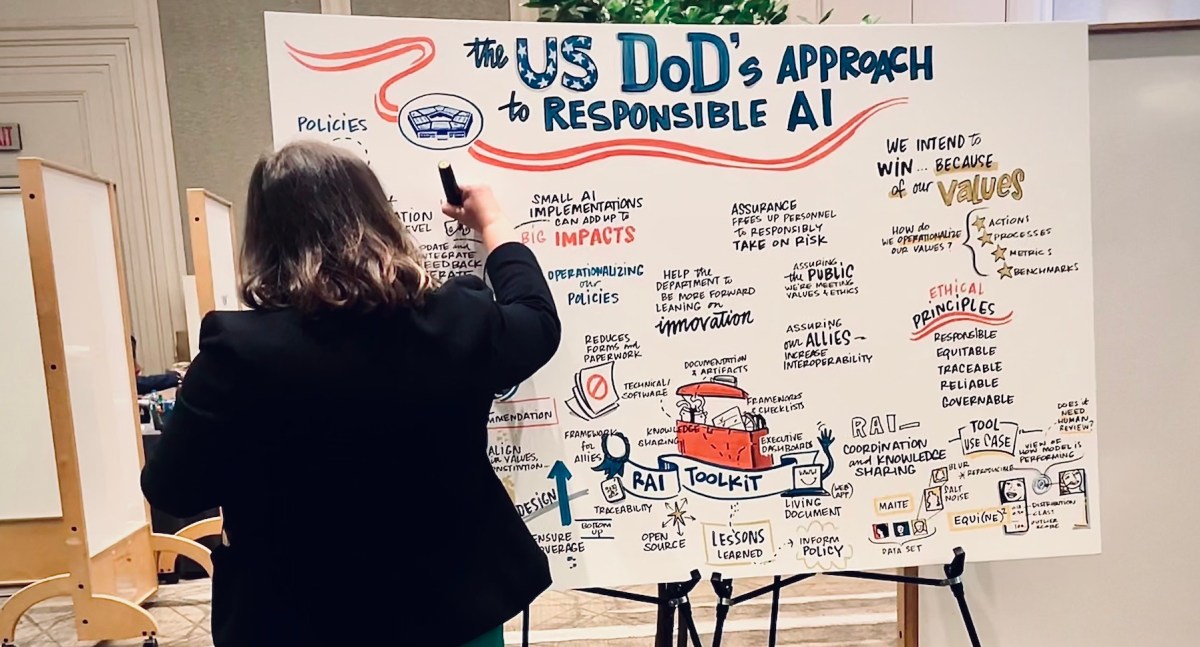

During an onstage demo and presentation on the CDAO’s toolkits at Tuesday’s forum, the office’s RAI Chief Matthew Johnson said his team is now working on interagency processes and digital mechanisms that government entities can use to demonstrate how their AI capabilities meet requirements set by the new NSM and related executive orders.

Turner later told DefenseScoop that Johnson was referring to work being steered by one of four working groups under the Federal Chief AI Officer Council, which is led by the White House Office of Science and Technology Policy and the Office of Management and Budget.

“One of those working groups is defining the minimum risk practices that the whole of the federal government will implement, and [the DOD] is very pleased that Dr. Johnson was asked to help lead that group,” Turner said.

Part of this work will involve generating new technical tools and principles that the CDAO hopes to package in the form officials consider most useful to real-world AI developers.

“There’s plenty of resources that developers can access in order to help identify bias, or understand how their dataset may be useful for their objective or outcome that they’re working towards. But how all of those resources get combined in a way that is intuitive, and then outputs a body of evidence that then those developers can take to their approving authorities and say, ‘Indeed, this is responsible capability’ — that is something that the RAI team within DOD has worked really hard on,” Turner noted.

“So, it’s been that work that is now starting to find additional footing in additional circles, like at the federal level” and by international partners, he added.

The toolkit and the range of resources that Johnson’s team created and continues to refine essentially provide interactive guidance and mapping that translates DOD’s ethical principles for AI adoption to “specific technical resources that can be employed as a model that’s being developed and along the model’s so-called life-cycle, which is not intuitively obvious to everyone at every level,” Turner said.

Without sharing many details, Turner confirmed to DefenseScoop that the Pentagon will be revisiting its recently updated policy for “Autonomy in Weapon Systems,” Directive 3000.09, to ensure it fully complies with the administration’s recent AI directions.

“I would just offer that the NSM and the [OMB memo on AI, M-24-10] largely focus on risk practices and associated reporting for ‘covered AI’ [that’s] generally rights- and safety-impacting AI and high-impact use cases. And so it is underneath that umbrella that we would see a smaller subset of these use cases and of these technologies. And lethal autonomous weapon systems would be one of those smaller categories underneath that umbrella,” he said.

Turner subsequently issued the following statement to DefenseScoop: “DoD Directive 3000.09 ‘Autonomy in Weapon Systems,’ was updated in January 2023 and provides policy and responsibilities for developing and using autonomous and semi-autonomous functions in weapon systems. The approval processes based on this Directive are well positioned to incorporate the minimum risk practices outlined in the AI National Security Memorandum.”

The artificial intelligence policy chief also expressed his view that the new NSM’s spotlight on advanced models and the uncertain and emerging field of generative AI is already driving “a more focused conversation around resourcing” across DOD components.

“There is certainly leadership attention and resourcing now that I think is in a much stronger place than it was before the NSM, largely in part due to the tremendous interagency work and articulation around the moment that we’re in and why we need to rise to this occasion,” Turner said.

Updated on Nov. 5, 2024, at 12:05 PM: This story has been updated to include an additional statement from John Turner about DOD Directive 3000.09, which was provided on Nov. 5.